Concluded: Samsung phones take “artificial” moon photos but Samsung has nothing to apologize for

This article may contain personal views and opinion from the author.

Social media users are accusing Samsung of lying about the Galaxy S23 Ultra’s ability to take photos of the moon, and for reasons unclear to me, everyone seems to be feeling quite strongly about it all…

To rewind the clock for a moment, the whole obsession with “moon shots” seems to have started at some point back in 2019 when the first modern smartphone with a periscope zoom camera (5x optical zoom) launched, managing to wow everyone with surprisingly clear photos of the moon (amongst other, more useful zoom photos).

“Samsung’s space zoom and moon photos are fake”, says Reddit user; conducts an investigation with photo evidence

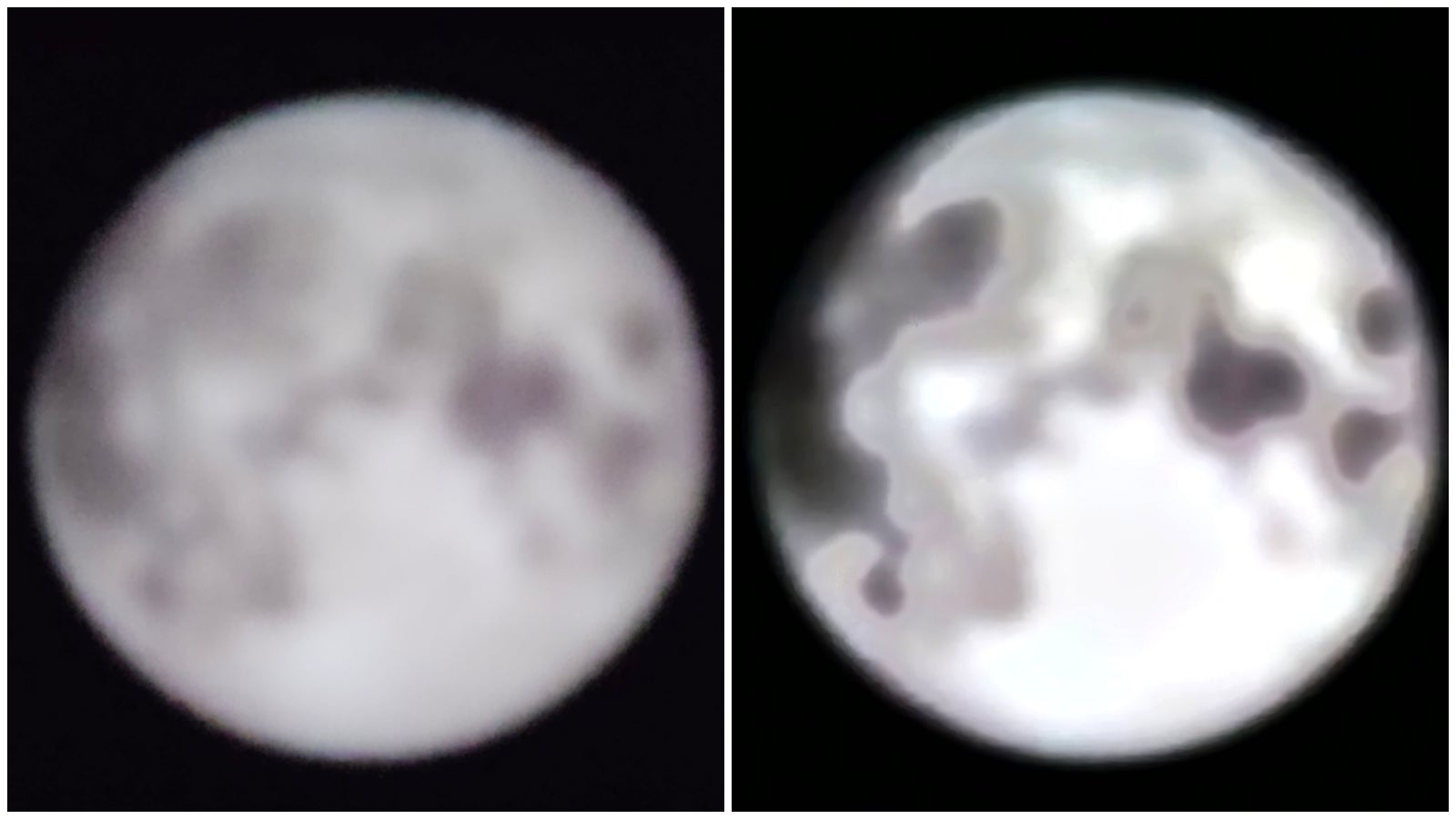

On the left we have a blurry image of the moon, displayed on a laptop; on the right we have what the Galaxy S23 Ultra managed to make out of it.

Before moving on to my own testing and findings, I have to address the Reddit post that sparked the newly-developed interest in this not-so-intriguing conspiracy. So, here’s what the user with handle ibreakphotos did, and what he discovered:

- The Reddit user downloaded a high-res image of the moon from the internet

- He downsized it to 170x170 pixels and applied some blur, so the original detail is significantly reduced (barely recognizable)

- He uploaded the blurry image on his laptop, moved to the other end of the room, and turned off all the lights

- He took a photo of the blurry image of the moon, and the Galaxy S23 Ultra produced the photo you see above

Now, clearly, the photo on the right looks nothing like the image on the left. The way a camera works is… OK, I’m not explaining that, but you get the point - the phone is supposed to take a photo of whatever you’re seeing, and not of something else (unless you’re using those creepy Snapchat filters).

The fact check: Testing Samsung’s moon shot with the real moon and with a blurry image of the moon

Anyway, of course I had to conduct my own “scientific” experiment. I first did exactly what the Reddit guy did, following his method step by step, and here’s what I discovered:

- After I uploaded the same blurry image of the moon on my MacBook and turned off all lights, I moved to the other end of the room

- I then snapped a 20x zoom photo as it’s exactly at 20x when Samsung’s clever post-processing for zoom photos usually kicks in
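For the curious, here is a minimal Python sketch of why the downsizing-and-blurring step in this experiment matters. It is not the Reddit user's actual workflow (the kind of blur he applied isn't specified here), so the `box_downsample` and `box_blur` helpers and the tiny 8x8 test patterns are assumptions made purely for illustration. The idea: two different fine patterns can collapse into the very same blurry image, so any crisp detail in a photo of that image has to come from somewhere other than the scene.

```python
def box_downsample(img, factor):
    """Shrink a grayscale image (list of rows) by averaging factor x factor blocks."""
    h, w = len(img) // factor, len(img[0]) // factor
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            block = [img[y * factor + dy][x * factor + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def box_blur(img, radius=1):
    """Blur by replacing each pixel with the mean of its neighbourhood."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - radius), min(h, y + radius + 1))
                    for xx in range(max(0, x - radius), min(w, x + radius + 1))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


# Hypothetical stand-ins for fine surface detail: two opposite checkerboards.
pattern_a = [[255 if (x + y) % 2 == 0 else 0 for x in range(8)] for y in range(8)]
pattern_b = [[0 if (x + y) % 2 == 0 else 255 for x in range(8)] for y in range(8)]

# Degrade both the way the experiment does: shrink first, then blur.
small_a = box_blur(box_downsample(pattern_a, 2))
small_b = box_blur(box_downsample(pattern_b, 2))

# The two originals differ at every pixel, yet their degraded versions match.
same_after_degrading = small_a == small_b
```

Here `same_after_degrading` ends up `True`: once the fine pattern has been averaged away, the blurry image alone no longer says which original it came from.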

On the left is the same blurry image of the moon used in the Reddit experiment, which I loaded on my MacBook; on the right is the Galaxy S23 Ultra's photo of the image at 20x zoom. Nothing fishy so far - the Galaxy S23 Ultra's photo actually made the moon look less like the moon.

So, to my relief and disbelief, Samsung wasn’t trying to trick me. The Galaxy S23 Ultra tried to make something out of the blurry blob but ended up with an even worse-looking photo, which proved the claims for fake moon shots were… fake. Until I tried something slightly different.

- After I uploaded the blurry image of the moon on my MacBook and turned off all lights, I moved to the other end of the room

- I then stepped way back into the hallway and went well past the 30x zoom mark (50x, 100x) to snap another photo of the blurry moon on my MacBook monitor, and voila…

On the left is the very same blurry image of the moon as displayed on my MacBook screen; on the right is what the Galaxy S23 Ultra managed to capture when I moved farther back and took a photo beyond 25x zoom - I think it was 50x.

For some reason, the Galaxy S23 Ultra’s post-processing magic decided it was time to kick in at a longer focal length (again, this usually happens at 20x zoom), giving me a completely different photo - you guessed it, reminiscent of what our Reddit hero managed to get in his own experiment. A full moon, full of detail, craters, and all the other features of the moon, which… I have no idea what they really are.

But I went the extra mile. In fact, 239,000 miles if we consider the distance between Earth and the moon.

Bear in mind, I'm doing my best to keep the moon in focus, while also trying to adjust the exposure (using the slider) to get a clear view. That's because unlike in photos, where AI is able to recognize the moon, lock focus, and enhance the image, the same magic is way more difficult to pull off in a video - which is many images moving in real time.

But speaking of AI and how it can alter the appearance of the moon, let’s move on to Samsung’s own explanation…

I tricked my Galaxy S23 Ultra into taking a clear photo of a blurry moon; or did the phone trick me? Samsung responds to accusations of deceiving users, confirming it uses AI to improve moon photos

Samsung responds to criticism over fake moon photos. The answer is A-I.

And now, on to the million-dollar question… are the moon photos taken by my Galaxy S23 Ultra… fake? I believe what’s causing the misunderstanding here is the difference between taking a “photo” of the moon and getting an image of the moon, and whether you’re OK with one or the other.

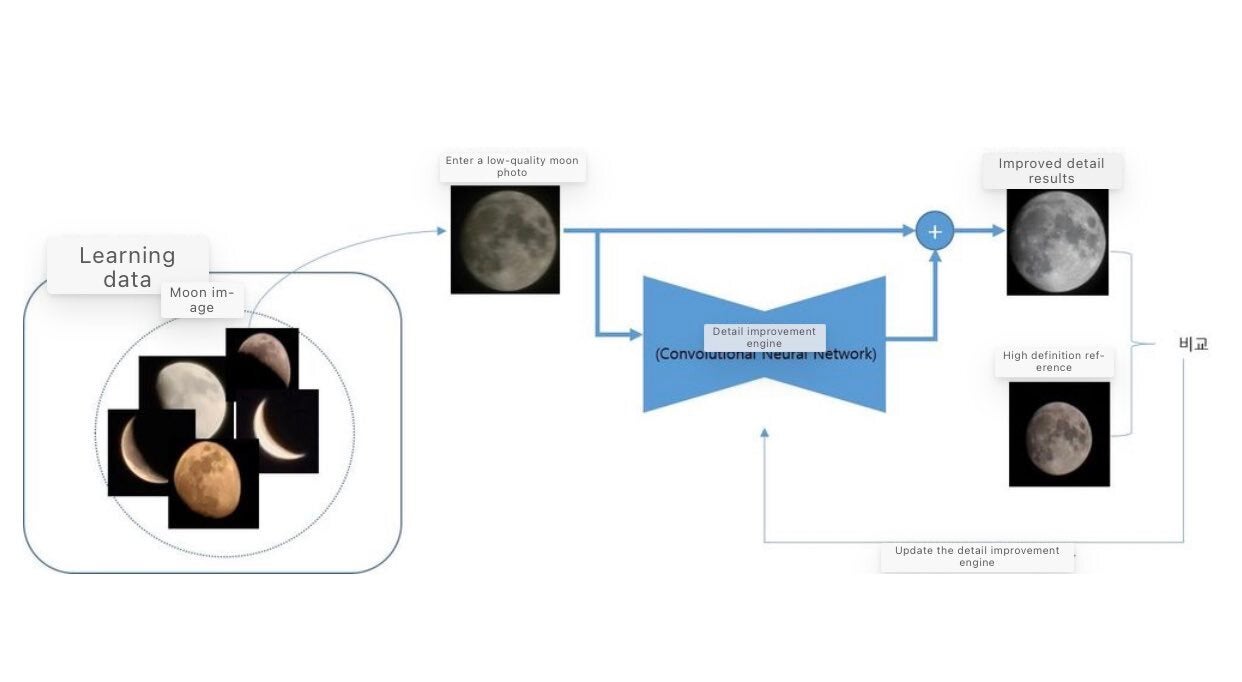

According to Samsung’s own explanation, when the Galaxy S23 Ultra takes a photo of the moon, the phone does actually use an AI learning algorithm to reconstruct lost detail in the moon. That’s possible because the moon is tidally locked to Earth, meaning you’ll always see the same “face” of the moon, which also makes training Samsung’s algorithm fairly straightforward.

The Lunar detection engine was created with the AI learning the different stages and shapes of the moon, from full moon to new, as perceived by people on the planet. It uses an AI deep learning model to detect the moon and identify the area it occupies (square box) in the relevant image. Once the AI model completes learning, it can detect the area occupied by the moon, even in images that were not used in the training.

So, yes, Samsung’s “Lunar detection engine” (have some of that, Dynamic Island!), is trained with different images of the moon, and in different moon phases in order to enhance your original photos once you go past the 25-30x zoom mark.

This confirms the theory that the moon photos you take with your Galaxy flagship are indeed altered to look clearer and better with the power of AI. Here’s the whole process (according to a very recent post by a Samsung Community Manager):

- The Galaxy camera provides clear pictures of the moon traversing several steps, using different AI technologies, in the process

- The scene optimizer technology automatically detects the scene shot, if the focus is right. It adjusts the configuration values to improve the resolution of the image after

- Also, the Zoom Lock feature ensures a clear preview by correcting the effect of an unsteady grasp

- You just have to press the shutter button after the moon is framed in a desirable composition and location. The Galaxy camera takes multiple shots and combines them later to remove any noise in the final image

- Finally, the image is complete with just the right amount of brightness and clarity using detail enhancement technology. It elevates the detail on the respective pattern of the moon
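The multi-shot step in that list can be illustrated with a rough Python sketch. Samsung hasn't published its code, so this is a generic frame-averaging example rather than the Galaxy camera's actual pipeline; the flat test patch, the noise level, and the helper names are all hypothetical.

```python
import random


def noisy_frame(scene, sigma, rng):
    """Simulate one exposure: the true scene plus random sensor noise."""
    return [pixel + rng.gauss(0, sigma) for pixel in scene]


def stack_frames(frames):
    """Combine several exposures by averaging them pixel by pixel."""
    count = len(frames)
    return [sum(pixels) / count for pixels in zip(*frames)]


def rms_error(frame, scene):
    """Root-mean-square difference between a frame and the true scene."""
    return (sum((a - b) ** 2 for a, b in zip(frame, scene)) / len(scene)) ** 0.5


rng = random.Random(0)            # fixed seed so the run is repeatable
scene = [100.0] * 2000            # hypothetical flat grey patch of 2000 pixels
frames = [noisy_frame(scene, 20.0, rng) for _ in range(16)]

single_error = rms_error(frames[0], scene)
stacked_error = rms_error(stack_frames(frames), scene)
```

With independent noise, averaging N frames is expected to cut the noise by about the square root of N, so `stacked_error` should come out well below `single_error`. Note what stacking can't do: it cleans up what the sensor captured, but it doesn't add detail that was never in any frame. That part is where the detail enhancement step comes in.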

And here’s Samsung’s fresh new press release that addresses the “Moon Shot” debate. What’s particularly interesting is that the closing sentence of the press release says Samsung will be working on its Scene Optimizer (AI algorithm) to make sure it’s less likely to be fooled - like in the experiment we just conducted.

Samsung continues to improve Scene Optimizer to reduce any potential confusion that may occur between the act of taking a picture of the real moon and an image of the moon.

Samsung

Yes, Samsung’s moon photos are sort of “fake” but the people who use Snapchat filters shouldn’t really care, right?

My Galaxy S23 Ultra took these at 30 and 100x zoom. But are they photos of enhanced images? Does it matter to you?

My verdict will leave everyone disappointed (love to do that), as I’m not about to “cancel” Samsung. But I’m also not about to cancel those who accused the company of faking moon photos.

I suppose it all comes down to whether and to what extent it’s OK to alter a photo until it becomes a whole different composition. And to come to Samsung’s “defence”, I’m not sure if enhancing a blurry image of the moon (adding something to a photo that wasn’t originally there) is any different than Magic Erasing a stranger from the background of your photo (removing something from a photo that was originally there).

But also, I’m not here to defend Samsung. In my view, if I wanted to see a clear photo of the moon, I’d just Google it. Isn't the whole point of capturing a moment in time (a photo) for that image to be unique and special?

How special is a relatively low-res photo of the moon with zero context, when I can download a similar, much clearer image from the internet? And how special is that “photo” when literally everyone else with a Galaxy Ultra can take it? It's literally the moon on a black background.

- Is it OK to use AI to enhance photos of the moon? Why not? Our phones already make the grass greener, the sky bluer, and our skin… better

- Does that make the Galaxy S23 Ultra’s moon photos fake? If you think of them as “photos” in the purest sense, then yes; but not if you think of them as a “processed image”, which is what they are

- Does it matter? If you ask me, not really; if all you want is a clear photo of the moon, then you probably don’t care about the details

- Could Samsung have been more forward about how the tech works? Yes, totally; it’s clear that Samsung’s marketing team is trying to sell the “dream” of moon photos without much explaining, but it’s come to a moment when they’ve now had to come clean - which they did


Photos of the moon? Huawei P60 Pro might soon let you take photos with the moon! Samsung will likely take inspiration for the Galaxy S24 Ultra, so get ready, internet...

The Huawei P60 Pro might reclaim the company's crown as the best Moon Shot-taker. But how fake is fake enough?

That being said, it’s not like the moon and other celestial objects aren't something worth taking a photo of (quite the opposite). Ironically, it’s Huawei (the original Moon Mode gangster) that might have figured out a way to justify the use of AI enhancement to take clear photos of the moon!

In a photo shared by Huawei CEO Richard Yu, we see something that even phones as powerful as the Galaxy S23 Ultra have not been able to do until now - a close-up photo of the moon with context - an object (located on Earth!) in the background.

I’m pointing out the obvious here, but if Huawei’s new P60 Pro flagship is able to give you a clear image of the moon while having a building or a person in the same frame, then I feel like moon photos suddenly become a bit more special, personal, useful, and justifiably “enhanced”.

Does that make them genuine? Maybe not, but it looks like a phone will soon be able to take a photo of you and the moon at the same time. You don’t care how Snapchat manages to give you dog ears… You just know you want dog ears, and Snapchat gives them to you.


So… IDK. Do we blame the AI, or do we... thank the AI?
