Apple previews useful accessibility features coming to iOS 17

Apple today previewed several new accessibility features that will appear in iOS 17. Assistive Access pares certain apps down to their most "essential features" to help people with cognitive disabilities. It offers customized experiences for Phone and FaceTime, which are combined into a single Calls app, as well as for Messages, Camera, Photos, and Music. The interface uses high-contrast buttons and large text labels.

For iPhone users who would rather communicate visually, the Messages app includes an emoji-only keyboard and the ability to record a video message to send to loved ones. With Assistive Access, users can also choose between a more visual, grid-based home screen layout and a row-based layout for those who find text easier to process.

Assistive Access makes iPhone easier to use for those with cognitive disabilities

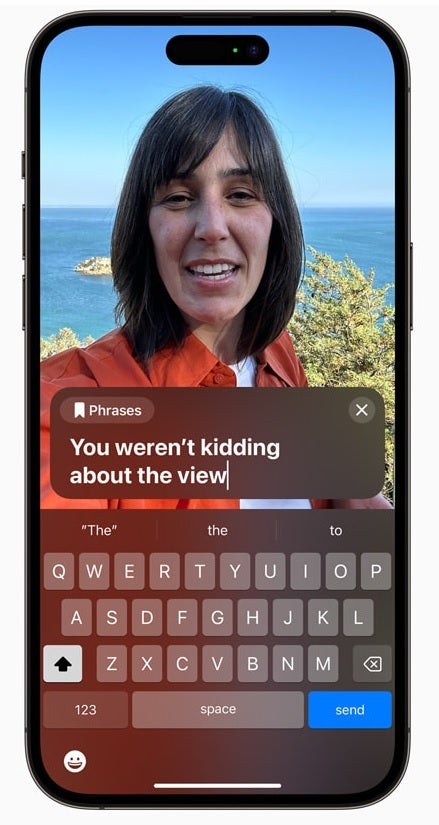

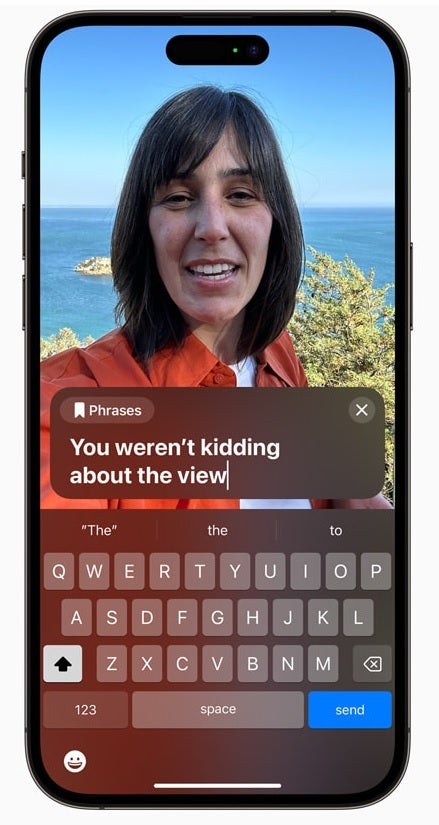

Live Speech will be available on iPhone, iPad, and Mac. During phone and FaceTime calls, users can type what they want to say and have the device speak it aloud so that the other parties on the voice or video call can hear it. Phrases the user repeats often can be saved and quickly inserted into conversations with friends, family, and co-workers. Apple points out that Live Speech is "designed to support millions of people globally who are unable to speak or who have lost their speech over time."

Live Speech lets those with speech disabilities type what they want to say on a voice or FaceTime call and have the phone speak it aloud

For those at risk of losing their ability to speak (as Apple notes, this could be a person recently diagnosed with ALS (amyotrophic lateral sclerosis) or another condition that progressively makes it harder to talk), a feature called Personal Voice has them read a randomized set of text prompts aloud for about 15 minutes on an iPhone or iPad. The feature integrates with Live Speech, so an iPhone or iPad user who has lost their voice can still talk with others using a voice that sounds like their own.

Personal Voice lets an iPhone user record texts and phrases on the device to use with the aforementioned Live Speech feature

Another accessibility feature, called "Point and Speak in Magnifier," helps those with visual disabilities read the text labels on the buttons of household appliances. Using the rear camera, the LiDAR Scanner, and on-device machine learning, the user can point the iPhone at the control panel of a microwave oven, for example, and as the user moves a finger across the keypad, the iPhone announces the text on each button. This should help people with low vision operate everyday devices more independently.

These are all useful features that we might hear more about when Apple previews iOS 17 at WWDC on June 5th. Apple CEO Tim Cook says, "At Apple, we’ve always believed that the best technology is technology built for everyone. Today, we’re excited to share incredible new features that build on our long history of making technology accessible, so that everyone has the opportunity to create, communicate, and do what they love."
