Apple's Visual Look Up is modeled after Google Lens

At the WWDC event livestreamed on June 7, Apple spoke about a new feature that is coming to the iPhone, iPad, and Mac this year.

The feature in question is Visual Look Up, which Apple says will use artificial intelligence software to recognize and classify objects found in photos. It received little attention during the livestream and came across almost as an afterthought, overshadowed by Apple's detailed introduction of Live Text (which is another cool feature, by the way).

However, it certainly caught our eye, and probably the eye of anyone who is familiar with Android's Google Lens. In fact, it seems to be copying exactly what Lens does, even if it's coming a little late to the game.

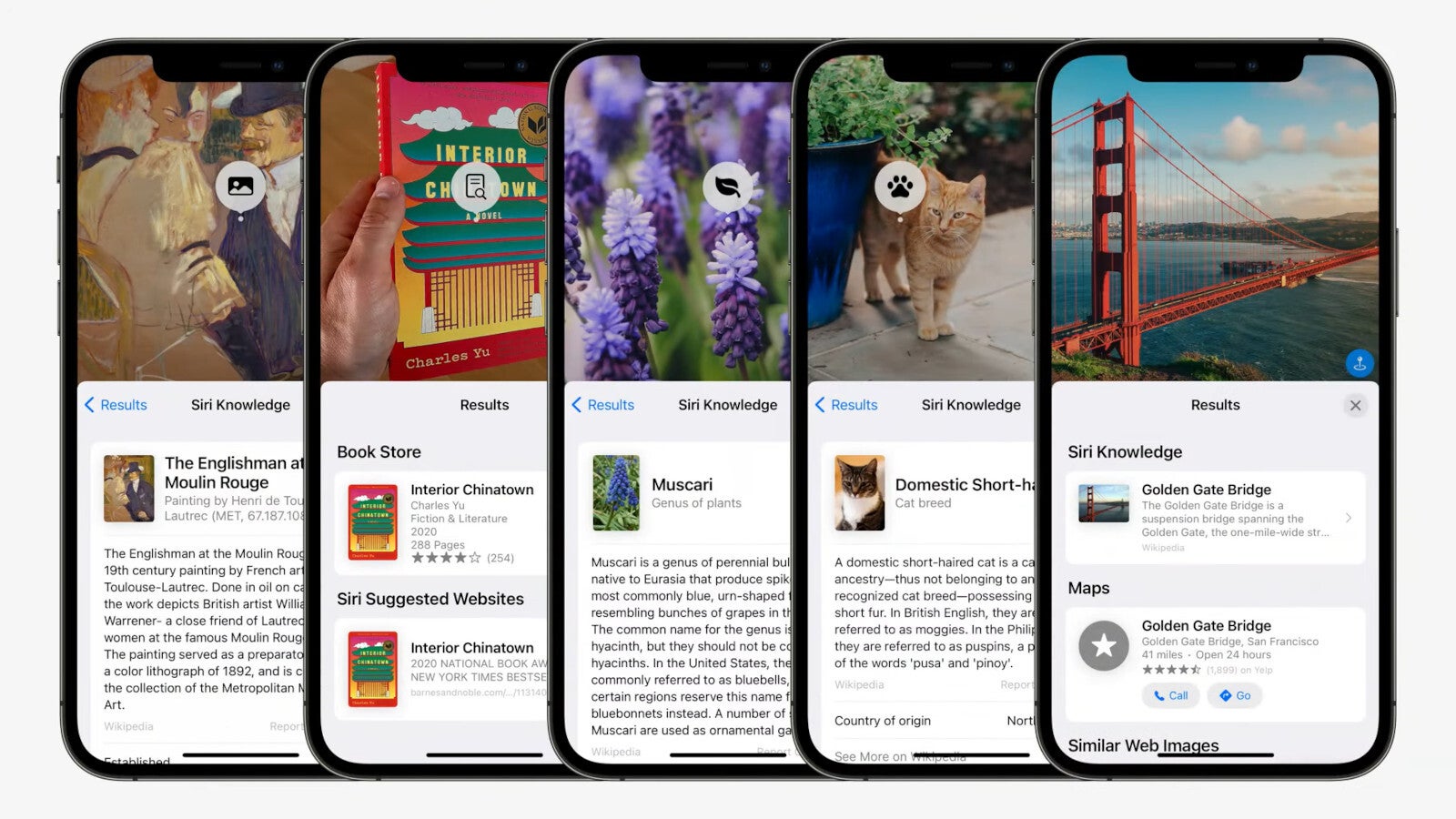

Visual Look Up will recognize a variety of objects captured in your photos and let you look up information on them by tapping the small interactive pop-up that appears on top of the image. According to Apple, it will help you identify things like the breed of a dog, the genus of a flower, the name and geographical location of a particular landmark, and so on.

Apple's news release following WWDC covers Visual Look Up only in a brief summary:

With Visual Look Up, users can learn more about popular art and landmarks around the world, plants and flowers found in nature, breeds of pets, and even find books.

The livestream showed about as much: a single row of screenshots illustrating exactly what is listed.

How will it compare to Google Lens?

With all of that taken into account, Apple's Visual Look Up is hardly at as impressive or versatile a stage of development as Google Lens, which came out in 2017 and has improved hugely since then.

Google Lens identifies dog breeds, plants, and landmarks too, but that's only the beginning of its capabilities. Google has developed Lens to the point where it can scan and recognize three-dimensional shapes through the camera, and look up nearly any product or object by searching for similar photos on the web, telling you where you can buy it and for how much.

Beyond that, Google Lens can extract words from photos and convert them into text that you can copy and paste wherever you want. While all those functions sit under the wide umbrella of Google Lens, Apple has just adopted this photo text feature too and is launching it separately with iOS 15, calling it Live Text.

Google Lens and Live Text both let you point your phone's camera at text containing contact info, such as a business card, and will instantly analyze it and prompt relevant actions. For example, you can call or message a phone number visible in the frame, or send an e-mail to an e-mail address.

With Google Lens you can also translate text in real time, with the translation superimposed onto the screen in augmented reality as you scan your surroundings. Apple's Live Text will also support translation, but it freezes the image and superimposes the translation on the still frame, rather than overlaying it live in AR as Lens does.

Apple has, unsurprisingly, been accused of copying Google in the area of AI-powered Visual Look Up, but intelligent photo-analysis technology was bound to reach its devices sooner or later. This can't justly be called plagiarism; it is more like following in the footsteps of inevitable progress.

Google Lens was originally planned only for Google's own Pixel line, but it has quickly grown and spread beyond that. On the other hand, we very much doubt Visual Look Up will ever be available for Android. That's to be expected, as Apple has always leaned towards creating a closed ecosystem whose components work best only with each other.

We do expect Apple to do something special with Visual Look Up in the future, even if it has only a limited set of functions for now.
