Virtual personal assistants can be hijacked by subliminal messages embedded in music

We've seen how a young child can place an order for a dollhouse using Alexa, and how a television anchorman repeating the story accidentally caused other Echo units to order a dollhouse too. Now comes a story that is even scarier in its implications. A report in Thursday's New York Times revealed that students from the University of California, Berkeley, and Georgetown University were able to hide subliminal commands for virtual personal assistants inside white noise played over loudspeakers. The commands included enabling airplane mode and requesting a website on a smartphone. That was back in 2016.

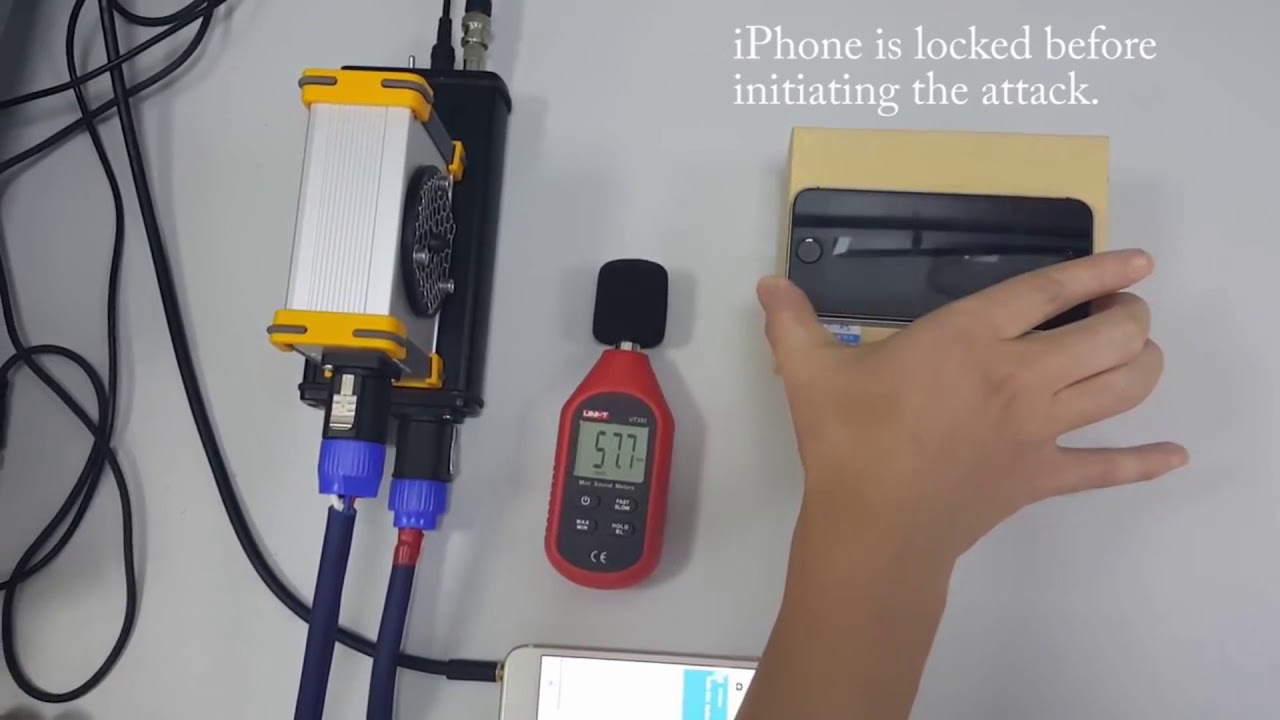

A technique called DolphinAttack shows that subliminal messages can even be embedded in sounds inaudible to the human ear. Check out the video at the top of this story. While DolphinAttacks require the transmitter to be placed in close proximity to the smart device receiving the hidden message, last month researchers were able to send these subliminal messages via ultrasound from 25 feet away.

Perhaps the scariest scenario was tested by Chinese and American researchers who discovered that virtual personal assistants would follow commands hidden inside music played from the radio or over YouTube. So if your phone or smart speaker starts acting funny and performs tasks that you didn't request, it could be reacting to a message embedded in a song you are listening to on the radio. Or, it could be coming from a message embedded in a sound that you can't even hear.

source: NYTimes
