Hidden code reveals Google's plan to make the Pixel 4 camera even better

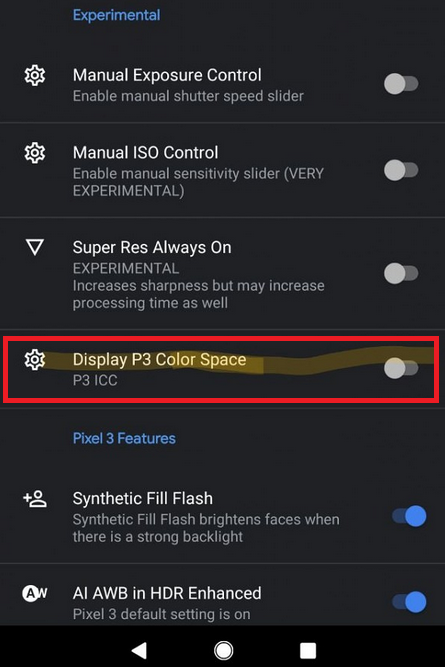

One of the best smartphone cameras on the market (if not the best) is about to get even better. XDA reports that code found in the Google Camera app by one of its senior members allows for wide-gamut P3 color capture. This is the same color space Apple uses for iPhone cameras, and it covers a range of colors about 25% larger than the sRGB color space currently used on Android phones. We could see this wider range of color capture on the Pixel 4.

Apple started using wide-gamut P3 capture with the iPhone 7, and Google said last year that it would be bringing wide-gamut color to Android. At that time, XDA found photo samples from a leaked Pixel 3 that contained an embedded Display P3 color profile, but by the time the phone was released Google had switched back to sRGB. Apparently, all sensors can capture colors outside of the sRGB color gamut, but right now Android apps don't support them. Certainly, no social media sites on Android can correctly show wide-color photos from iOS users. And if an iOS user shares such a photo with an Android phone, the out-of-range colors are replaced by the closest colors found in the sRGB gamut.
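To make that last point concrete, here is a minimal Python sketch of how a Display P3 color can end up outside the sRGB gamut and get pulled back in. It assumes the commonly published linear Display P3 to linear sRGB matrix (rounded to four decimals) and uses simple per-channel clipping as a stand-in for "closest color." The function names are hypothetical, and this is not necessarily how Android or any particular app handles the conversion.

```python
# Illustrative sketch only: Display P3 -> sRGB with naive per-channel clipping.
# Assumption: both spaces use the D65 white point and the sRGB transfer curve.
# The matrix below is the commonly published linear P3 -> linear sRGB transform,
# rounded to four decimals. All function names here are hypothetical.

P3_TO_SRGB = (
    (1.2249, -0.2249, 0.0000),
    (-0.0421, 1.0421, 0.0000),
    (-0.0196, -0.0786, 1.0982),
)


def decode(c):
    """Gamma-encoded channel value (0..1) -> linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def encode(c):
    """Linear light (0..1) -> gamma-encoded channel value."""
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055


def p3_to_linear_srgb(rgb):
    """Convert an encoded Display P3 triple to linear sRGB, without clipping."""
    lin = [decode(c) for c in rgb]
    return tuple(sum(m * v for m, v in zip(row, lin)) for row in P3_TO_SRGB)


def in_srgb_gamut(rgb, eps=1e-4):
    """True if the Display P3 color can be represented in sRGB as-is."""
    return all(-eps <= c <= 1 + eps for c in p3_to_linear_srgb(rgb))


def p3_to_srgb_clipped(rgb):
    """Convert to sRGB, clamping each out-of-range channel into 0..1."""
    clipped = [min(1.0, max(0.0, c)) for c in p3_to_linear_srgb(rgb)]
    return tuple(encode(c) for c in clipped)


if __name__ == "__main__":
    p3_red = (1.0, 0.0, 0.0)
    print(in_srgb_gamut(p3_red))       # the most saturated P3 red
    print(p3_to_srgb_clipped(p3_red))  # what an sRGB-only viewer would show
```

In this sketch, the most saturated P3 red falls outside sRGB and is clamped to plain sRGB red, so the extra saturation is lost. Real color management systems may use more sophisticated gamut mapping than a per-channel clamp.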

Google is making big changes with the next generation of Pixel phones. Based on a teaser disseminated by Google itself (in response to some widely circulated renders), the Pixel 4 will sport an iPhone 11-like square camera module in the upper left corner of the phone's rear panel. The two-tone look on the back is gone. And we couldn't help thinking that when Google tweeted about the Pixel 4, "Wait 'til you see what it can do," it was talking about the rumored Project Soli integration. Project Soli is Google's sensing technology, which uses radar to track "micro motions" of the hand so that a device can be controlled with subtle, fine hand gestures. And since there doesn't appear to be a fingerprint sensor on the phone, there is talk that Google is borrowing from Apple again by putting all of its eggs into one secure facial recognition basket. In other words, the Pixel 4 could be equipped with 3D mapping technology, possibly using a Time-of-Flight (ToF) sensor, that can map faces much like the structured-light system used by Apple. The Pixel 4 will reportedly be equipped with five front-facing sensors.

Google wants to challenge Apple and Samsung with the Pixel line

Google apparently understands that the Pixel line must now take the next step up if it wants to challenge the iPhone and Samsung's Galaxy S line at the top of the smartphone food chain. But it will take more than adding some new features and shining up the hardware to get there. Since the OG Pixels launched in 2016, each new generation has needed some fine-tuning from Google to get rid of annoying bugs that showed up after release. Things were so bad with the Pixel 2 and Pixel 2 XL that a law firm advertised for owners of these two devices so that it could start a class-action suit against Google, HTC and LG. Software updates from Google eventually fixed the problems, and after the first few weeks each Pixel was stable with no major issues raised.

The Pixel 4 will have a square camera module similar to what we expect on the iPhone 11

Certainly, the Pixel launches were nowhere near as disastrous as, say, the BlackBerry Storm (still the gold standard for half-baked phone releases), but it is time for Google to buckle down and prove that it can hang with Apple and Samsung in the handset market. The Pixel 4 needs to come out of the box without any negative surprises. And if all of the rumors are right about the addition of wide-gamut P3 color capture and the use of Project Soli's sensing technologies, we could see Google finally deliver the handset we knew it could create.

The Pixel 4 could employ wide-gamut P3 color capture
