Can Pixel 4's radical Motion Sense navigation spell 'the end of the touchscreen'?

Google's Project Soli sounds suspiciously like what LG did on the G8 ThinQ with the Multi ID and hand-tracking algorithms enabled by its 3D-sensing front camera kit, except that it relies on radar waves rather than a camera to detect the motion of the human hand. You know, like in this GIF below:

So, what can you do with Motion Sense on the Pixel 4?

You can skip songs in Spotify and YouTube, and the display will emit a subtle glow when a gesture is executed so that you know your command has registered, like we showed you yesterday. You can also snooze alarms, dismiss timers, and silence incoming calls with a wave, and each feature can be turned on or off from Settings > System > Motion Sense.

The fun part of Motion Sense will be gaming and the so-called “Come Alive” series of wallpapers that respond to your motions, like the Pikachu one that Google showed during the presentation. You can pet the Pokémon, and it will apparently wake up when you reach for the phone. Specialized games are also in, and Google promises many more features for Motion Sense down the road.

We waved Pikachu to sleep

How does Motion Sense work?

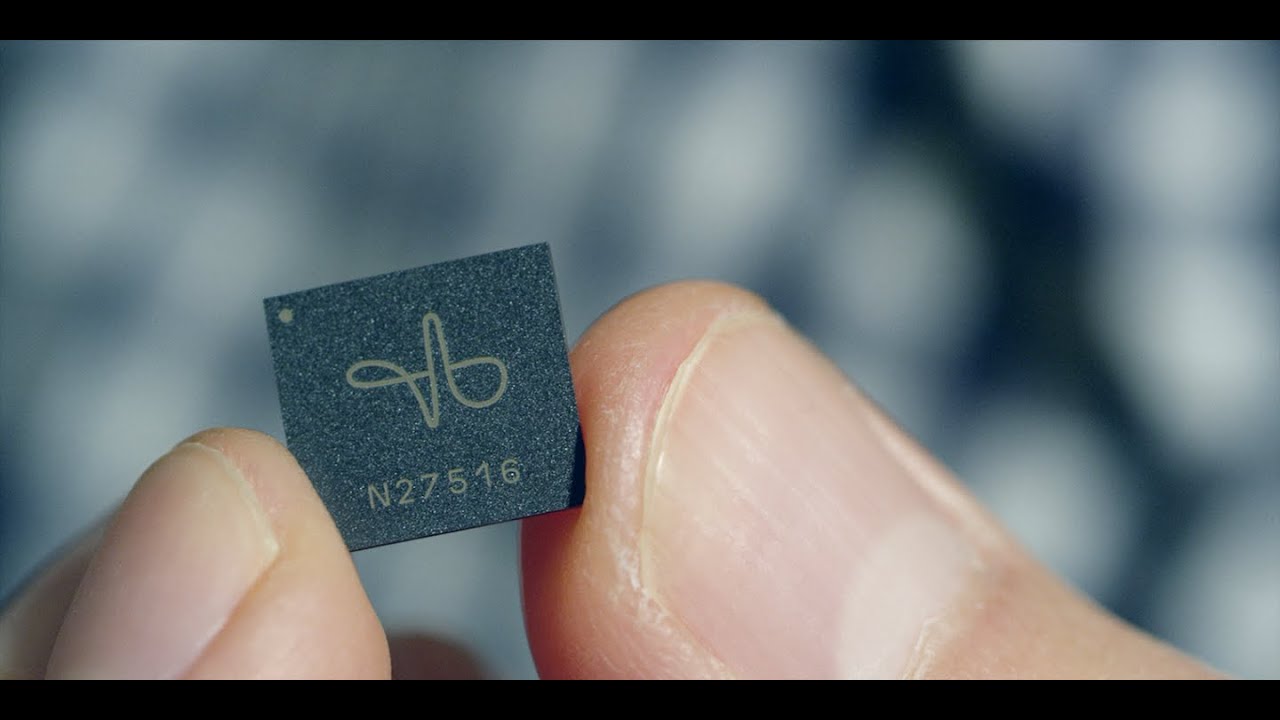

Google has miniaturized the technology into a Soli chip that is as small as a pinky nail, yet can detect the minutest of motions. It works on the same principle as the big flight radars that detect airplane movements in the sky. Unfortunately, this was precisely why the FCC didn't let the Soli chip fly until December 31, 2018, when it granted Google a waiver from some of its requirements for radars in the commercial 57-64 GHz frequency band with the following:

By this Order, we grant a request by Google, LLC (Google) for waiver of section 15.255(c)(3) of the rules governing short-range interactive motion sensing devices, consistent with the parameters set forth in the Google-Facebook Joint ex parte Filing, to permit the certification and marketing of its Project Soli field disturbance sensor (Soli sensor) to operate at higher power levels than currently allowed. In addition, we waive compliance with the provision of section 15.255(b)(2) of the rules to allow users to operate Google Soli devices while aboard aircraft.

We find that the Soli sensors, when operating under the waiver conditions specified herein, pose minimal potential of causing harmful interference to other spectrum users and uses of the 57-64 GHz frequency band, including for the earth exploration satellite service (EESS) and the radio astronomy service (RAS). We further find that grant of the waiver will serve the public interest by providing for innovative device control features using touchless hand gesture technology.

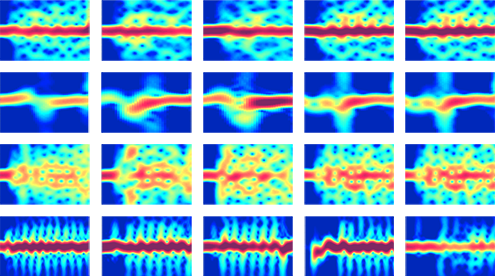

Google claims that the radar and the accompanying software can "track sub-millimeter motion at high speeds with great accuracy." The Soli chip does it by pushing out electromagnetic waves in a broad sweep that get reflected back to the tiny antenna inside.

A combination of sensors and software algorithms then accounts for the energy these reflected beams carry, the time they needed to come back, and how they changed on the way, and can thus determine "the object’s characteristics and dynamics, including size, shape, orientation, material, distance, and velocity." Although the small chip can't match the spatial resolution of larger installations, Google has refined its motion sensing and prediction algorithms to tolerate slight variations in gestures, so that they all map to one and the same interface action.
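To put rough numbers on the "time they needed to come back" and "how they changed on the way" parts, here is a minimal sketch of the textbook radar arithmetic. It is not Google's Soli pipeline, which is far more involved. The 60 GHz carrier is our assumption (it simply sits inside the 57-64 GHz band mentioned in the FCC waiver), and the function names are made up for illustration.

```python
# Textbook radar arithmetic, NOT Google's actual Soli signal processing.
# Assumption: a 60 GHz carrier, picked from within the 57-64 GHz band.

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def wavelength(carrier_hz: float) -> float:
    """Wavelength in meters of a wave at the given carrier frequency."""
    return SPEED_OF_LIGHT / carrier_hz


def range_from_round_trip(delay_s: float) -> float:
    """Distance to the target in meters; the wave travels there and back."""
    return SPEED_OF_LIGHT * delay_s / 2.0


def radial_velocity_from_doppler(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Speed toward (+) or away from (-) the radar, from the Doppler shift."""
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


if __name__ == "__main__":
    carrier = 60e9  # hypothetical carrier frequency in Hz
    print("wavelength (mm):", wavelength(carrier) * 1000)
    print("range for a 2 ns echo (m):", range_from_round_trip(2e-9))
    print("speed for a 200 Hz shift (m/s):",
          radial_velocity_from_doppler(200.0, carrier))
```

At these frequencies the wavelength is about 5 mm, and an echo from a hand roughly 30 cm away returns in about two nanoseconds, which gives a feel for the timescales a chip like this has to deal with.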

The Soli radar algorithms can recognize gesture variations brought on by different users

The technology is thus superior to the 3D-sensing cameras at the front or back of some phones, which depend on line of sight and lighting conditions. Here's the initial brief on Google's Soli tech, which will debut in a retail version for the first time on the Pixel 4.

Motion Sense brings a universal set of gestures

Hand gestures come more naturally than pushing against a piece of glass, yet so far the technology for recognizing them on a phone has been imperfect, as it relied on camera sensors. Google is aiming to revolutionize the interaction with our mobile devices by employing the radar-based Motion Sense technology, which would include, but not be limited to, the following natural gestures that can be employed in any orientation of the phone, day or night.

Button

Slider

Dial

So, can the world be your interface?

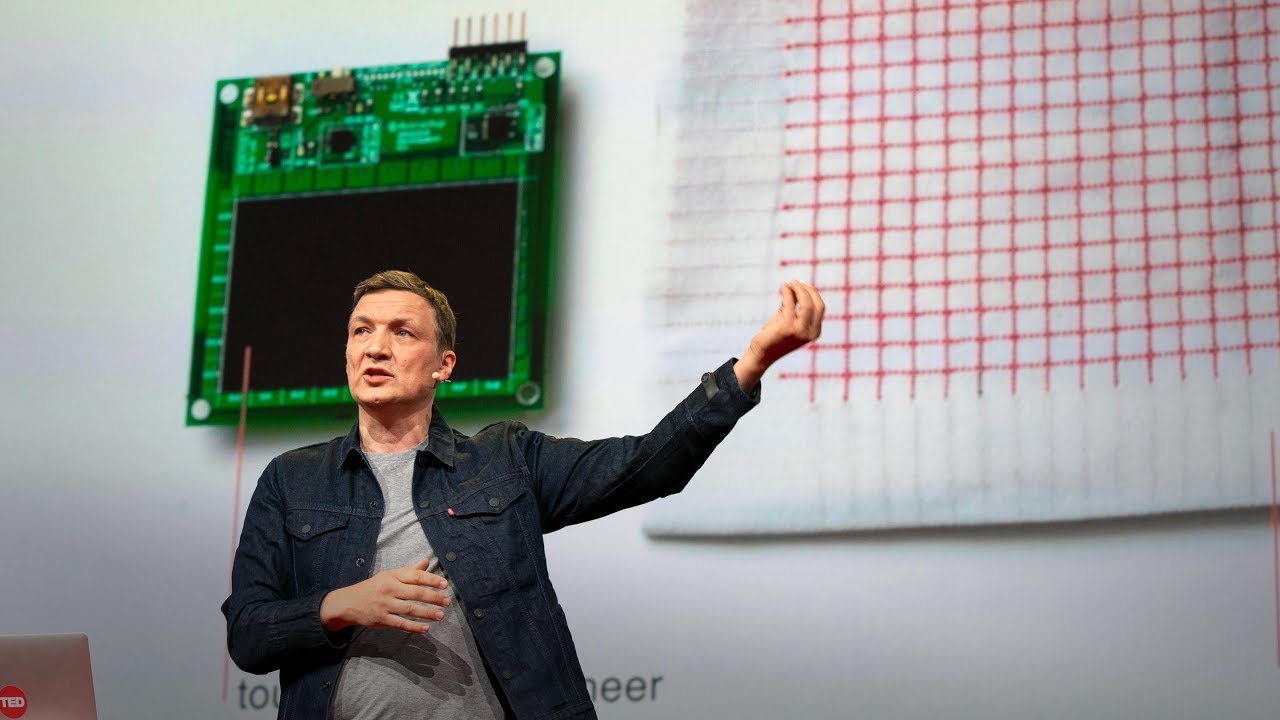

In fact, the guy behind Project Soli, Google's Ivan Poupyrev, whom you saw in the video above, recently gave a TED talk explaining how this radar-based gesture navigation can be deployed everywhere in a "the world is your interface" kind of moment.

While we can't really comment on the practicality of Google and Levi's Project Jacquard idea that employs the motion sensing gizmo in a denim jacket, getting it into the Pixel 4 is a whole different ball game.

Levi's Jacquard jacket has a Soli radar as a snap tag

Google's Pixel 4 and Motion Sense

Just as we were preparing this primer on Project Soli, Google came out and confirmed that this will indeed be the tech occupying the mysterious openings at the right of the thick top bezel on the Pixel 4. In its blog post, the company went through the same points and advantages we list above in more detail. Apparently, it all ties in with the "ambient computing" strategy of Google's head of hardware Rick Osterloh, which he explains as:

Our vision is that everything around you should be able to help you. And so many things are becoming computers that we think the users should be able to seamlessly get help wherever they need it from a variety of different devices.

What's a bit worrying, however, is that Google lists the Motion Sense abilities on the Pixel 4 as "skip songs, snooze alarms, and silence phone calls, just by waving your hand." Not for nothing, but those are the things that LG does on the G8 ThinQ just with the front camera kit, no fancy miniaturized radars, no bezelicious sprawling at the top.

What about scrolling through long articles with an air flick of the finger, or going back in the interface with a simple thumb twitch, though? Google does wax poetic that this is just the start and "Motion Sense will evolve," but we've heard many a marketing write-up for options and features that ultimately prove to be slow on the uptake.

That Motion Sense "will be available in select Pixel countries" bit is also raising a few eyebrows: why would a Pixel 4 model in one place come with the radar-based gesture navigation, while in others it won't? Is it because different countries have different rules on commercial radars in the 57-64 GHz frequency band and some are reluctant to accept the FCC's waiver? Yep, Google now lists that it will be "functional in the US, Canada, Singapore, Australia, Taiwan, and most European countries," while availability will be "coming soon in Japan."

What do you think: could Pixel 4's Motion Sense indeed be the "end of the touchscreen" and the beginning of the "world is your interface" era, or is it too early to tell, or too complex an interaction paradigm to take off?
