Google's Pixel phones have some of the best cameras on the market. Shying away from the dual-cam craze for a second year in a row, Pixel phones are nonetheless capable of delivering amazing results during the day and in low-light conditions. They can even do the oh-so-popular shallow depth-of-field simulation, better known as "portrait mode," that is otherwise reserved for dual-lens shooters. The remarkable thing is that Pixel phones do this entirely through software, since there's no second camera on board to help measure depth. And although phones with two cameras are generally better at assessing depth and separating the subject from the background, the Pixel 2 and Pixel 2 XL have thus far impressed us with what they can do.

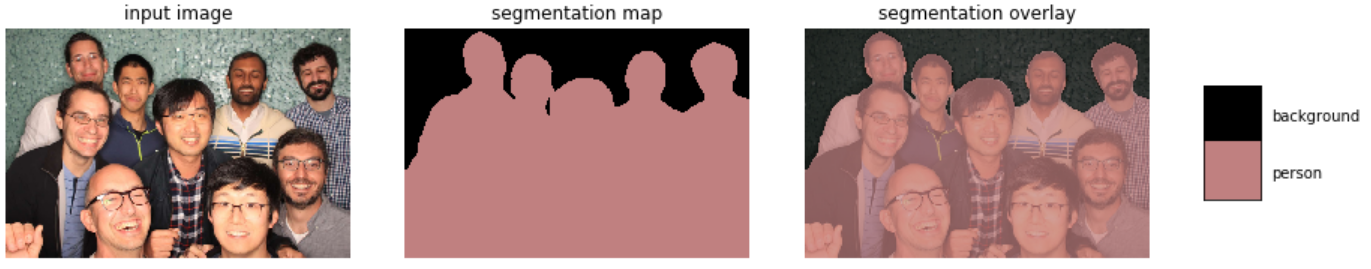

In true Google fashion, the company has now open-sourced the tool that makes software-only portrait mode possible. The code that does the magic is called DeepLab-v3+, and it uses semantic image segmentation to identify and label different objects in an image. Through machine learning, DeepLab can differentiate between "person" and "sky," for example, and label them as such, which then helps segment the image into "layers" that can be processed separately in various ways.
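To make the "layers" idea concrete, here is a minimal sketch of how a per-pixel label map could drive a portrait-style effect. It is not Google's implementation and does not call DeepLab itself: it assumes a segmentation model has already produced an integer label for every pixel, and the names `box_blur`, `fake_portrait`, and `SUBJECT_LABEL` are hypothetical, chosen only for illustration. A plain box blur stands in for whatever background treatment a real camera app would use.

```python
import numpy as np

# Hypothetical label id for the subject ("person"); a real model defines its own ids.
SUBJECT_LABEL = 1


def box_blur(image, radius):
    """Blur an H x W x C image with a (2*radius+1)^2 box filter, padding edges."""
    size = 2 * radius + 1
    height, width = image.shape[:2]
    padded = np.pad(
        image, ((radius, radius), (radius, radius), (0, 0)), mode="edge"
    )
    total = np.zeros(image.shape, dtype=np.float64)
    # Sum every shifted copy of the image that falls inside the box window.
    for dy in range(size):
        for dx in range(size):
            total += padded[dy:dy + height, dx:dx + width]
    return total / (size * size)


def fake_portrait(image, labels, subject_label=SUBJECT_LABEL, radius=2):
    """Keep subject pixels sharp and replace all other pixels with a blurred copy.

    image:  H x W x C array of pixel values
    labels: H x W integer array, one class label per pixel (assumed model output)
    """
    image = image.astype(np.float64)
    subject_mask = (labels == subject_label)[..., None]  # H x W x 1, broadcasts over C
    background = box_blur(image, radius)
    return np.where(subject_mask, image, background)


# Usage: a tiny synthetic image with a 2 x 2 "person" in the middle.
rng = np.random.default_rng(0)
demo_image = rng.random((6, 6, 3))
demo_labels = np.zeros((6, 6), dtype=int)
demo_labels[2:4, 2:4] = SUBJECT_LABEL
demo_result = fake_portrait(demo_image, demo_labels)
```

The same mask could just as easily drive other per-layer edits, such as recoloring the sky or swapping out the background, which is why the segmentation step matters more than the blur.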

Google explains its reasoning in the announcement:

> Modern semantic image segmentation systems built on top of convolutional neural networks (CNNs) have reached accuracy levels that were hard to imagine even five years ago, thanks to advances in methods, hardware, and datasets. We hope that publicly sharing our system with the community will make it easier for other groups in academia and industry to reproduce and further improve upon state-of-art systems, train models on new datasets, and envision new applications for this technology.

Since DeepLab-v3+ is now open source, developers can freely implement it in their own apps! What's really cool is that this tech has a lot of potential uses, with portrait mode being just one of many.
