Microsoft shows OmniTouch: its vision for the future of touch interfaces (hint: they’re everywhere)

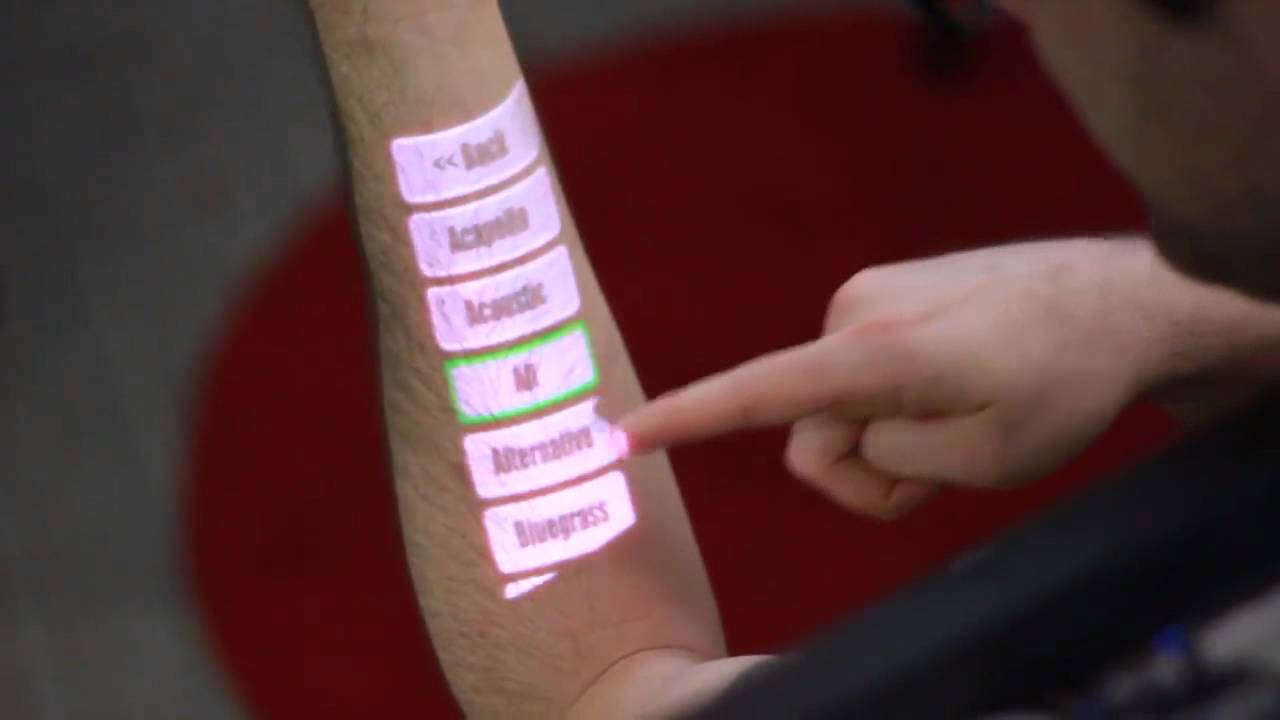

Now, here’s what we want to see more of from Microsoft: innovation. Two teams at Microsoft Research have been working on two very exciting projects and presented their findings at the user interface symposium UIST 2011. The first project, OmniTouch, is the more eye-catching of the two, with a futuristic idea: turn every surface into a touch-enabled space.

How did they do it? By planting a small Kinect-like device on your shoulder, which lets you interact with any surface using multitouch: tapping, dragging and even pinch-to-zoom. It sounds almost incredible, and the researchers admit that the first three weeks of developing the project were the hardest.

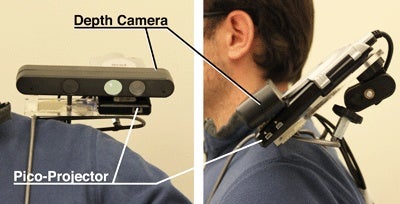

To do that, they built a Kinect-like device consisting of a laser pico projector, which projects images onto any surface, and a depth-sensing camera, which is responsible for the magic. Tweaking the depth camera to recognize human fingers as the source of input, and adjusting the accuracy of its depth recognition, was key to the success of the project.

"Sensing touch on an arbitrary deformable surface is a difficult problem that no one has tackled before. Touch surfaces are usually highly engineered devices, and they wanted to turn walls, notepads, and hands into interactive surfaces—while enabling the user to move about."

The prototype you see in the image is not small at all. In fact, it’s ridiculously big for use in public spaces, but the research team says there are no big barriers to miniaturizing it to the size of a matchbox. It could also be conveniently worn as a watch or a pendant.

The results exceeded expectations, and all that’s left for us now is to wish that these technologies reach the mainstream sooner rather than later.

source: Microsoft Research via CNET

Update: And if you've just had déjà vu, here's why:
